ISR and RSR

Relighting from a Single Image: Datasets and Deep Intrinsic-based Architecture, IEEE TMM 2025

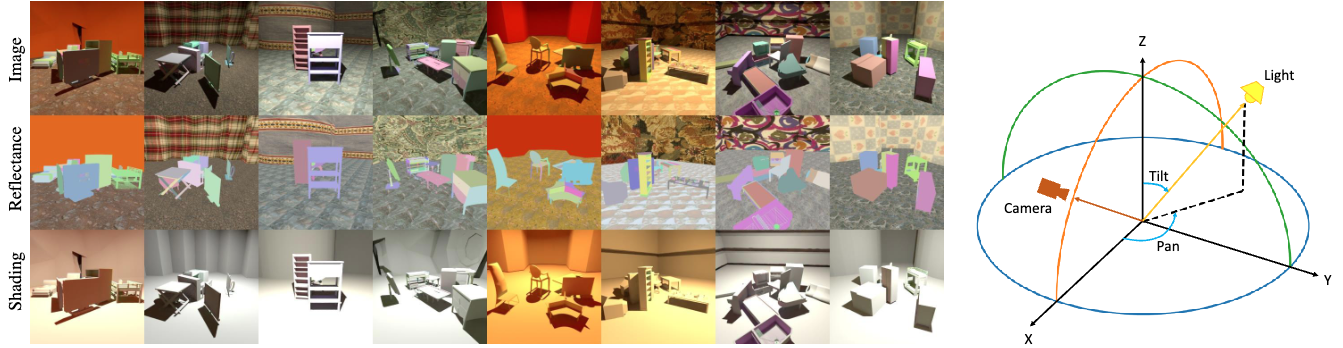

Single-image scene relighting aims to generate a realistic new version of an input image that appears to be illuminated under a target lighting condition. In our paper, “Relighting from a Single Image: Datasets and Deep Intrinsic-based Architecture”, we propose two new datasets: a synthetic dataset with ground-truth intrinsic components (ISR) and a real dataset collected under laboratory conditions (RSR).

ISR: Intrinsic Scene Relighting Dataset

The images of this first dataset are generated with the open-source Blender rendering engine. The ground truth includes the intrinsic components. ISR has 7801 scenes, each rendered under 10 different light conditions. Each scene contains between 3 and 10 non-overlapping objects, randomly selected from various categories of the ShapeNet dataset, including electronics, pots, buses, cars, chairs, sofas, and airplanes. For more details, please check our paper and GitHub repo.

The dataset is split into three separate downloads: Reflectance, Shading, and Images. You can download only the Reflectance and Shading, since the code can compute the images from them. Note: The name of the picture is: {Index of scene (part 1)}{Index of scene (part 2)}{pan}{tilt}{light temperature}_{unused}. The first two numbers together form the scene ID, while pan, tilt, and light temperature are the lighting parameters.
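As a rough illustration of how an image could be recovered from the two intrinsic components, the sketch below assumes the standard intrinsic model, in which the image is the element-wise product of reflectance and shading. The function name `compose_image`, the [0, 1] value range, and the final clipping are assumptions made for this example; the code in the repo may apply additional scaling or tone mapping.

```python
import numpy as np


def compose_image(reflectance, shading):
    """Recombine intrinsic components as image = reflectance * shading.

    Assumes both inputs are float arrays scaled to [0, 1] (an assumption,
    not something stated by the dataset). `shading` may be H x W (grayscale)
    or H x W x C (colored); `reflectance` is H x W x C.
    """
    reflectance = np.asarray(reflectance, dtype=np.float32)
    shading = np.asarray(shading, dtype=np.float32)

    # Give grayscale shading a trailing channel axis so it broadcasts
    # against the color reflectance.
    if shading.ndim == reflectance.ndim - 1:
        shading = shading[..., np.newaxis]

    # Element-wise product, clipped back to the assumed [0, 1] range.
    return np.clip(reflectance * shading, 0.0, 1.0)


if __name__ == "__main__":
    # Tiny synthetic example standing in for a loaded image pair.
    r = np.full((2, 2, 3), 0.5, dtype=np.float32)
    s = np.full((2, 2), 0.5, dtype=np.float32)
    print(compose_image(r, s).shape)
```

With real data, the two arrays would be loaded from the Reflectance and Shading downloads for the same scene and light condition before being passed to the function.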

RSR: Real Scene Relighting Dataset

To validate the effectiveness of our method in real-world scenes, we developed the real scene relighting (RSR) dataset. The RSR dataset was acquired in a lab with an automatic setup. We use a Nikon D5200 DSLR camera to capture the images. The scene area is lit by a 3 × 3 multicolored LED light matrix, and the scene is set on a rotatable table. This acquisition system is shown in the figure below. Each scene is composed of a background and several objects. The library of backgrounds has 8 textured options. We carefully chose a set of 80 real-life objects covering a wide range of materials and shapes, from glossy to matte materials and from natural to basic shapes. The objects are organized into 3 groups: 20 natural (organic and stone), 40 manufactured (plastic, paper, glass, or metal), and 20 basic (foam) shapes.

The dataset with original resolution can be downloaded here.

The dataset with 256x256 resolution can be downloaded here.

Note: 1) The name of the picture is: {index of picture}{index of group (different scene or different view)}{pan}{tilt}{R}{G}{B}{index of scene}{unused}{index of view}{index of light position}. The fields to pay attention to are pan, tilt, and color (RGB), which are the parameters of the light. 2) The lights are ordered as follows:

| 5 | 4 | 3 |
|---|---|---|
| 6 | 1 | 2 |
| 7 | 8 | 9 |
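The layout above can be turned into a small lookup that maps a light index to its place in the 3 × 3 matrix. This is only a sketch: the names `LIGHT_GRID` and `light_grid_position` are hypothetical, and it assumes that the {index of light position} field in the file name uses the same 1 to 9 numbering as the table, and that the table's rows and columns correspond directly to the physical rows and columns of the LED matrix.

```python
# Light indices laid out as in the table above (row 0 is the top row).
LIGHT_GRID = (
    (5, 4, 3),
    (6, 1, 2),
    (7, 8, 9),
)


def light_grid_position(light_index):
    """Return the (row, column) of a light index in the 3 x 3 matrix."""
    for row, row_values in enumerate(LIGHT_GRID):
        if light_index in row_values:
            return row, row_values.index(light_index)
    raise ValueError(f"light index must be in 1..9, got {light_index!r}")


if __name__ == "__main__":
    # Light 1 sits in the center; the others spiral around it.
    for index in range(1, 10):
        print(index, light_grid_position(index))
```

Note that light 1 is the center of the matrix and lights 2 through 9 run counterclockwise around it, starting from the middle of the right-hand column.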