Evaluating Low-Light Image Enhancement Across Multiple Intensity Levels

MILL Dataset

Existing low-light image enhancement (LLIE) datasets are limited in one of two ways: they either contain a single severely underexposed image per scene or simulate brightness variations by adjusting camera parameters. Neither reflects real-world use, where low-light conditions span a continuous range of intensities. Our experiments show that models trained on fixed brightness levels fail to generalize across intensities, producing artifacts such as oversaturation when tested on intermediate brightness levels.

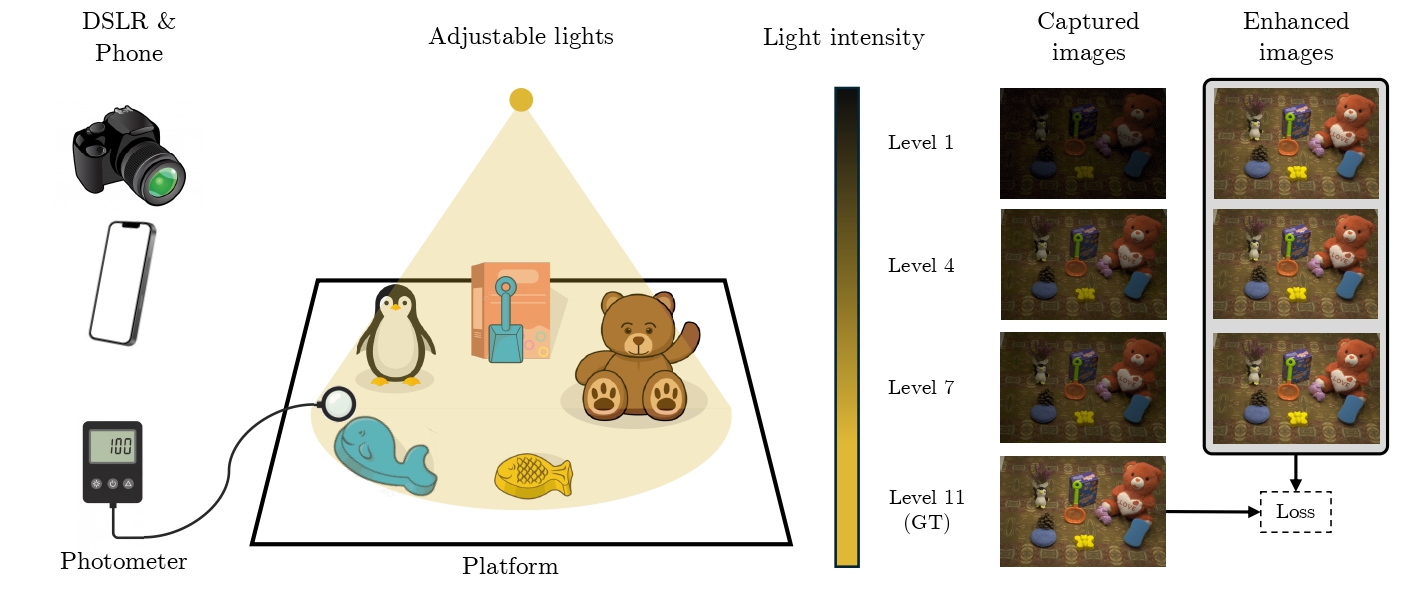

To address these limitations, we introduce the Multi-Illumination Low-Light (MILL) dataset, which captures each scene at multiple brightness levels with fixed camera parameters. All images were captured in a controlled indoor environment with programmable lighting to precisely control brightness levels. We used a Nikon D5200 DSLR camera and a Samsung Galaxy S7 smartphone, enabling evaluation across different sensor characteristics.

Dataset Composition: We captured 50 scenes at 11 intensity levels (Levels 1-10 plus ground truth) with each of the two cameras, totaling 1,100 images (50 × 11 × 2). The dataset includes 6 different backgrounds and 98 unique objects, with no overlap between the train/validation and test sets. The split comprises 30 training scenes, 12 validation scenes, and 8 test scenes.
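The split sizes above can be cross-checked with a few lines of Python. This is a minimal sketch, assuming every scene is captured at all 11 levels by both cameras (the only reading under which 50 scenes add up to 1,100 images); the scene IDs and function names are hypothetical and not part of any released MILL tooling.

```python
# Hypothetical bookkeeping sketch for the MILL split sizes.
# ASSUMPTION: every scene is captured at all 11 levels by both cameras.
SCENES = {"train": 30, "val": 12, "test": 8}
LEVELS = 11  # Levels 1-10 plus the ground-truth level
CAMERAS = ("dslr", "smartphone")  # Nikon D5200 and Samsung Galaxy S7


def images_per_split(scenes: dict[str, int], levels: int, n_cameras: int) -> dict[str, int]:
    """Images per split when every scene appears at every level on every camera."""
    return {split: n * levels * n_cameras for split, n in scenes.items()}


def assign_scene_ids(scenes: dict[str, int]) -> dict[str, set[int]]:
    """Give each split a contiguous block of hypothetical scene IDs."""
    ids, start = {}, 0
    for split, n in scenes.items():
        ids[split] = set(range(start, start + n))
        start += n
    return ids


def splits_are_disjoint(split_ids: dict[str, set[int]]) -> bool:
    """True if no scene ID appears in more than one split."""
    names = list(split_ids)
    return all(
        split_ids[a].isdisjoint(split_ids[b])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    )


counts = images_per_split(SCENES, LEVELS, len(CAMERAS))
print(counts, sum(counts.values()))  # {'train': 660, 'val': 264, 'test': 176} 1100
```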

Available Versions:

- MILL-s (small): All images resized to 600×400 pixels for methods with computational constraints

- MILL-f (full): DSLR images divided into 9 non-overlapping 2012×1340 patches (close to Full-HD resolution) and smartphone images kept at their original 1560×1040 resolution, expanding the set to 5,500 images (4,950 DSLR patches plus 550 smartphone images)
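The MILL-f tiling can be sketched as follows. This assumes the 9 patches form a 3×3 grid, which implies a DSLR frame of 6036×4020 pixels; that frame size, the function names, and the count of 550 images per camera are our assumptions, derived from the numbers above rather than taken from released code.

```python
# Sketch of MILL-f style tiling into non-overlapping patches.
# ASSUMPTIONS: a 3x3 grid and a 6036x4020 DSLR frame (3 * 2012 by 3 * 1340);
# function names are hypothetical.
PATCH_W, PATCH_H = 2012, 1340
DSLR_W, DSLR_H = 3 * PATCH_W, 3 * PATCH_H  # 6036 x 4020


def grid_boxes(width: int, height: int, patch_w: int, patch_h: int) -> list[tuple[int, int, int, int]]:
    """(left, upper, right, lower) boxes tiling the image row by row.
    Pixels that do not fill a whole patch at the right/bottom edge are dropped."""
    cols, rows = width // patch_w, height // patch_h
    return [
        (c * patch_w, r * patch_h, (c + 1) * patch_w, (r + 1) * patch_h)
        for r in range(rows)
        for c in range(cols)
    ]


def mill_f_size(dslr_images: int, phone_images: int, patches_per_dslr: int) -> int:
    """DSLR frames are split into patches; smartphone frames are kept whole."""
    return dslr_images * patches_per_dslr + phone_images


boxes = grid_boxes(DSLR_W, DSLR_H, PATCH_W, PATCH_H)
print(len(boxes), boxes[-1])               # 9 (4024, 2680, 6036, 4020)
print(mill_f_size(550, 550, len(boxes)))   # 5500
```

The boxes follow the (left, upper, right, lower) convention used by Pillow's `Image.crop`, so each one could be passed to it directly.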

All images were captured in RAW format and processed using Camera RAW, ensuring high-quality, well-calibrated data for robust training and evaluation of LLIE methods across varying illumination conditions.


Method Overview

Leveraging the unique multi-illumination structure of our dataset, we introduce two auxiliary loss terms to improve existing low-light image enhancement (LLIE) methods. Our approach explicitly disentangles the latent features into illumination-related and scene-related components, enabling models to better generalize across different brightness conditions.

Key Components

1. Intensity Prediction Loss (ℒi): We constrain the first latent feature channel to predict the normalized intensity value of the input scene. This loss minimizes the L1 distance between the predicted intensity at each spatial location and the known scene illumination level, ensuring that illumination information is explicitly encoded in the latent representation.

2. Scene Content Loss (ℒs): Using a triplet loss formulation, we encourage the remaining latent channels to encode scene content that is independent of lighting conditions. This loss pushes together representations of the same scene under different illumination levels, while separating representations of different scenes at the same brightness level.

3. Combined Objective: The complete loss function combines these components with a reconstruction loss (ℒre):

ℒ = ℒre + ℒi + ℒs
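The three loss terms above can be sketched in plain Python. This is a minimal, framework-free illustration, not the authors' implementation: the latent is modeled as a list of H×W channels, and the Euclidean distance, the margin of 1.0, and all function names are our assumptions. A real training loop would compute these terms on batched tensors in a deep-learning framework so that gradients flow through them.

```python
import math

# Framework-free sketch of L_i, L_s and L; not the authors' code.
# A latent is modeled as a list of channels, each an H x W nested list.
Latent = list[list[list[float]]]


def split_latent(z: Latent) -> tuple[list[list[float]], Latent]:
    """Channel 0 encodes illumination; the remaining channels encode scene content."""
    return z[0], z[1:]


def intensity_loss(z: Latent, target: float) -> float:
    """L_i: mean L1 distance between channel 0 at each pixel and the
    scene's normalized intensity `target`."""
    illum, _ = split_latent(z)
    values = [v for row in illum for v in row]
    return sum(abs(v - target) for v in values) / len(values)


def scene_vector(z: Latent) -> list[float]:
    """Flatten the scene channels into one vector."""
    _, scene = split_latent(z)
    return [v for ch in scene for row in ch for v in row]


def scene_triplet_loss(anchor: Latent, positive: Latent, negative: Latent,
                       margin: float = 1.0) -> float:
    """L_s: triplet loss on scene vectors.
    positive = same scene as anchor at another level;
    negative = different scene at the anchor's level.
    ASSUMPTION: Euclidean distance and margin=1.0."""
    a, p, n = (scene_vector(z) for z in (anchor, positive, negative))
    return max(0.0, math.dist(a, p) - math.dist(a, n) + margin)


def total_loss(l_re: float, l_i: float, l_s: float) -> float:
    """L = L_re + L_i + L_s (unweighted, as written in the text)."""
    return l_re + l_i + l_s
```

For example, when the anchor and positive share identical scene channels, `scene_triplet_loss` is 0 as soon as the negative lies at least `margin` away, and it grows as the positive drifts from the anchor or the negative moves closer.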

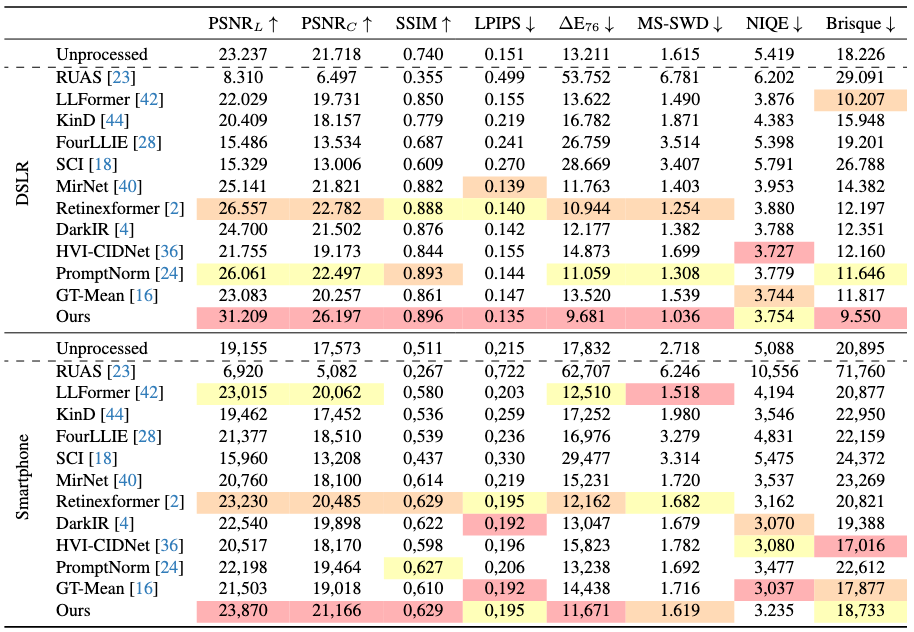

We adopt Retinexformer as our baseline architecture due to its strong performance in our benchmark evaluation. By explicitly disentangling illumination and scene content in the latent space, our method achieves up to 10 dB PSNR improvement for DSLR and 2 dB for smartphone images compared to the baseline.
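To put these gains in context: PSNR is 10·log10(MAX²/MSE), so a gain of Δ dB means the mean squared error shrank by a factor of 10^(Δ/10). A 10 dB improvement is therefore a tenfold reduction in MSE, and 2 dB is roughly a 1.58× reduction. The sketch below assumes pixel values in [0, 1]; the function names are illustrative.

```python
import math


def psnr(pred: list[float], target: list[float], max_val: float = 1.0) -> float:
    """PSNR in dB between two equal-length lists of pixel values in [0, max_val]."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_val ** 2 / mse)


def mse_reduction_for_gain(gain_db: float) -> float:
    """Factor by which MSE must shrink to raise PSNR by `gain_db` decibels."""
    return 10 ** (gain_db / 10)


print(mse_reduction_for_gain(10))            # 10.0
print(round(mse_reduction_for_gain(2), 3))   # 1.585
```

Because the ratio depends only on Δ, it holds regardless of whether PSNR is computed on [0, 1] or [0, 255] pixel values.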

Results

We benchmark mainstream LLIE methods by retraining them on our MILL-s dataset using their officially released code. For further analysis and details, see the paper.
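Because MILL provides every scene at every intensity level, benchmark scores can be broken down per level rather than averaged into a single number. A minimal sketch of that aggregation, assuming scores arrive as (level, score) pairs such as per-image PSNR; the function names and the spread summary are hypothetical, not the paper's evaluation code.

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_level(records: list[tuple[int, float]]) -> dict[int, float]:
    """Average a metric (e.g. PSNR) separately for each intensity level."""
    buckets: dict[int, list[float]] = defaultdict(list)
    for level, score in records:
        buckets[level].append(score)
    return {level: mean(buckets[level]) for level in sorted(buckets)}


def level_spread(per_level: dict[int, float]) -> float:
    """Gap between the best and worst level; smaller means more uniform behavior."""
    return max(per_level.values()) - min(per_level.values())
```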

Citation

If you find this work useful for your research, please cite:

@article{pilligua2025evaluating,
  title={Evaluating Low-Light Image Enhancement Across Multiple Intensity Levels},
  author={Pilligua, Maria and Serrano-Lozano, David and Peng, Pai and Baldrich, Ramon and Brown, Michael S and Vazquez-Corral, Javier},
  journal={arXiv preprint arXiv:2511.15496},
  year={2025}
}

Acknowledgments

This work was supported by Grants PID2021-128178OB-I00 and PID2024-162555OB-I00 funded by MCIN/AEI/10.13039/501100011033 and by ERDF "A way of making Europe", and by the Generalitat de Catalunya CERCA Program. DSL also acknowledges the FPI grant from the Spanish Ministry of Science and Innovation (PRE2022-101525). JVC also acknowledges the 2025 Leonardo Grant for Scientific Research and Cultural Creation from the BBVA Foundation. The BBVA Foundation accepts no responsibility for the opinions, statements and contents included in the project and/or the results thereof, which are entirely the responsibility of the authors. This research was also supported by the Natural Sciences and Engineering Research Council of Canada (NSERC) and the Canada Research Chairs (CRC) program.